At the SLCC, Linden Lab announced its continuing support for open-source efforts: the launch of Snowstorm, a project for a new viewer (based on the Snowglobe 2.X codebase) which will get Lindens and third-party volunteer developers working much, much more closely together to develop what Philip Linden hopes will be the ultimate viewer or, as he says these days, the way “to bring Linden Lab back to the lead in innovation and technology”. Tateru Nino has already commented a bit on the change of strategy; I have to humbly confess that I’m not familiar enough with the Scrum methodology to say whether it’s the best way to handle a fast pace of development involving a lot of external developers. Still, some things look interesting. For instance, in theory at least, the Emerald team could fork the Snowstorm code to implement their spell-checking functions, manage that fork as if it were their own in-house project, release a build for open testing by any resident, and, after a few weeks, commit it back to the main code, where it would become part of an “official” Snowstorm release (these will happen every other week). Sounds reasonable? Well, yes; it’s also a way to get more people to help Linden Lab fix bugs, now that they have fired so many developers (although, says Philip, they still have 175 of them working at LL on 15 different teams).

On the other hand, Linden Lab’s other major open-source project, intergrid connectivity, seems to have been dropped, possibly for good. This is bad news indeed. By now, the IETF should have been close to agreeing on relatively final standards for the intergrid protocol, VWRAP; but if Linden Lab drops its commitment, the protocol’s future is not very bright. On the other side of the coin, we have the OpenSimulator community with its amazing Hypergrid protocol, now in its 1.5 incarnation, which is simply mind-boggling in the way it operates.

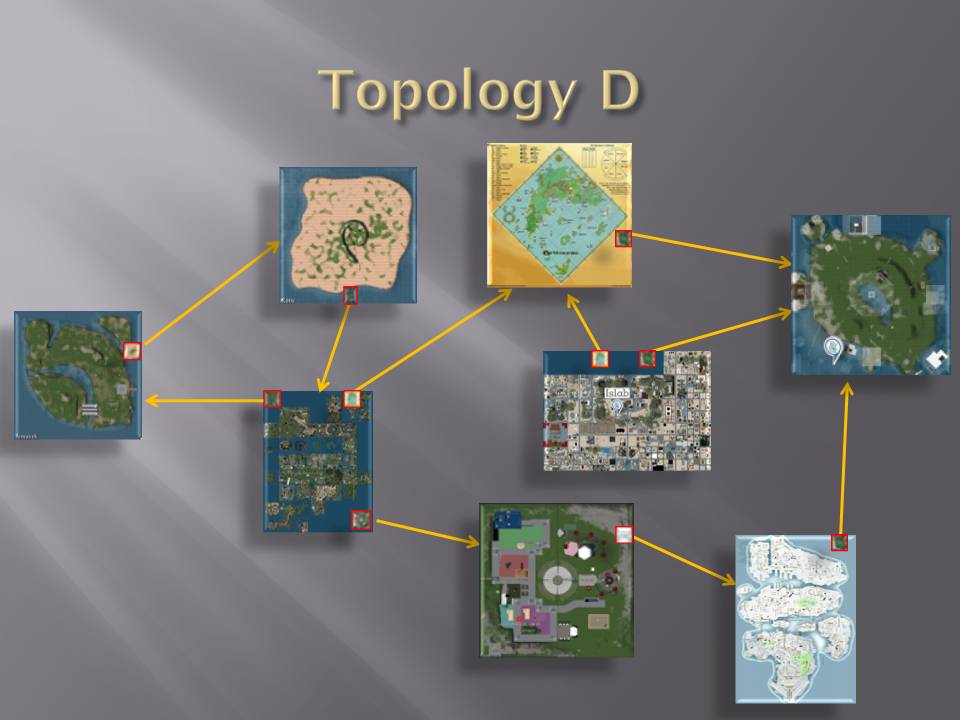

I had to try it out for myself 🙂

A picture is worth a thousand words; a video is worth a million…

The best way, I guess, is to show a video, and this is what I’ve done. Please understand that pretty much everything you will see runs on low-to-average hardware and connectivity. After you watch it, some explanations are due…

In the video above, I mostly wanted to make three points. First, and perhaps most importantly, it works, and it works far better than I imagined. Secondly, OpenSim, in spite of its insanely fast pace of development, is still very flaky: some might compare it to Second Life in 2002 or early 2003, when most things worked some of the time, but failure was usually catastrophic. And thirdly, we’re slowly getting all the pieces assembled: with every new iteration, OpenSim comes closer to becoming what people have in mind when thinking of a true Metaverse, namely lots of virtual worlds, each running independently of the others, but all joined together in a chaotic weave of interconnections. While some of the steps shown in the video might not be very impressive yet, the underlying technology is everything we had hoped Linden Lab would develop, and which we asked of them as early as 2006 or so. It’s more than merely a “proof of concept” or a “nice prototype”: we can actually use it now, even though patience is required, since the technology is at the alpha stage and will remain so for many, many years.

You’ll see that I often stop to type strange links in chat and then click on them. This is how Hypergrid teleporting is implemented, and it’s viewer-independent: it also works with the SL viewers. For instance, a link to a sim on LL’s SL Grid is simply secondlife://Beta Technologies/128/128/40 (if you paste it into chat in your viewer, you can click on the link and teleport there). For links to OpenSim grids, you just add the grid URI in front of the region name, e.g.:

secondlife://opensim.betatechnologies:8002/Beta Technologies/128/128/40

This should allow you to teleport from any OpenSim grid running Hypergrid 1.5 to my company’s sim on our own grid. The secondlife: prefix just names the protocol, not the grid. So, yes, grids are easy to tell apart: the login URI is unique for every grid.
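The two link forms above differ only in whether a grid address comes before the region name. As a rough illustration, here is a minimal Python sketch of how a client might split such a link into grid, region, and coordinates. It assumes only the two shapes shown in the text; real viewers may accept other variants (such as links without coordinates), and the `HGLink` and `parse_link` names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class HGLink:
    grid: Optional[str]  # e.g. "opensim.betatechnologies:8002"; None means "the current grid"
    region: str
    x: int
    y: int
    z: int


PREFIX = "secondlife://"


def parse_link(link: str) -> HGLink:
    """Split a secondlife:// link into grid, region and coordinates.

    Assumes four path segments for a region on the current grid and five
    when a grid address is prepended, as in the examples in the text.
    """
    if not link.startswith(PREFIX):
        raise ValueError("not a secondlife:// link")
    parts = link[len(PREFIX):].split("/")
    if len(parts) == 4:
        grid = None
        region, x, y, z = parts
    elif len(parts) == 5:
        grid, region, x, y, z = parts
    else:
        raise ValueError(f"unexpected link shape: {link!r}")
    return HGLink(grid, region, int(x), int(y), int(z))


local = parse_link("secondlife://Beta Technologies/128/128/40")
remote = parse_link("secondlife://opensim.betatechnologies:8002/Beta Technologies/128/128/40")
print(local.grid, remote.grid)  # None opensim.betatechnologies:8002
```

The sketch keys only on the number of segments, which is enough to show that the grid address is just an extra prefix; a region name containing a slash would break it.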

Some background on the underlying technology

Let’s scroll back to the beginning. Linden Lab’s Second Life Grid® is a technology based on a set of fixed assumptions. There is a large array of central servers (nobody outside the ‘Lab knows exactly what they are or how they’re configured). Then there are some 5,000+ servers, all with relatively similar configurations (servers of the same class have similar hardware specs, and they all run the same software), which in turn run the simulator software (sims), each region running on its own virtual machine. Between 4 and 16 regions can run on a single server, although semi-official rumours claim that LL has been experimenting with higher CPU densities and may one day fit 64 openspace regions on a single server. All this is automated: when someone buys a region from the Land Store, everything from installing the operating system to setting up the region simulator is done through point-and-click configuration.

Things started to become confusing when Linden Lab expanded beyond a single co-location facility. All of a sudden, some sims were running outside the network where the central servers were. Apparently, the addresses of those servers are hard-coded in some way, so the solution was first to create a VPN between co-location facilities (a software-based solution that makes external servers “look like” they are on the same physical network) and, later, to simply run fibre between the co-location facilities, effectively placing the servers on the same physical network once more.

Some comments made by the engineers (like this explanation by FJ Linden, which followed a more thorough description of how the grid is physically implemented) revealed that some co-location facilities actually run some of the central asset servers: Dallas and Phoenix apparently have their own central servers, while Washington DC does not. It’s not clear whether they’re just clones of each other kept in sync (as in an earlier implementation). Also, thanks to HTTP downloads of assets, implemented last week, the bulk of all requests to the asset servers can now be pushed into the Amazon S3 cloud, saving precious bandwidth and giving Linden Lab an extra layer of reliability. Amazon S3 is far more stable than LL’s own setup, simply because storage is the core service Amazon provides, and cloud technology is currently the best-known way to provide almost infinite redundancy.

Nevertheless, what is important in this picture is that the whole Second Life Grid runs on a unified system. There is just a single architecture for the entire grid. Of course, there are thousands of individual servers to keep track of, but they all work pretty much the same way.

Now contrast that with the rest of the Internet! Each system, each network, each computer, each node is different. Servers and active network devices come in all sorts of configurations. From smartphones to high-end routers, from home-run Web servers on old hardware to complex cluster solutions, massively parallel supercomputers, and cloud computing, each system is uniquely managed, and each architecture is different. Google might have more servers than Amazon or Microsoft, and although Google’s servers share the same specs across all its co-location facilities around the world, their configuration has nothing to do with what its competitors use, and vice-versa. There is no “standard” way of connecting servers and networks to the Internet-at-large, except for one thing: they all speak the same protocols.

The difficulty that Linden Lab had when they started moving servers to a different co-location facility was that their architecture was never planned to be anything but uniform. Merely having “two locations” introduced a layer of complexity that had never been foreseen. Sims, although pretty much independent of each other, were designed to work on the same network and connect to the same central servers. Everything was designed from the ground up with a single grid in mind.

The heterogeneous OpenSim

Enter OpenSim deployment. Just like on the Internet, there is no one-size-fits-all technical solution for deploying it. OpenSim runs (at least) on Windows, Mac, and Unix back-ends; in fact, on anything that runs Mono. Several Mono versions will work (although older ones might simply fail to compile OpenSim). Servers can be co-located at high-end data centres with unlimited bandwidth, sit behind corporate or academic firewalls, or be underpowered PCs in a basement connected via home ADSL, or even your laptop. OpenSim can be configured either as a standalone version or with separate central servers. The whole range of configurations includes standalone sims connected to external central servers; all central servers on a single machine or on separate ones (which includes moving the database servers out for increased performance and reliability); or Hypergrid-connecting several servers which are technically part of the same grid but actually keep separate storage in different places. Servers hosting sims are not limited to a single location: they can be spread around a campus, or even around the world, according to needs or capabilities. For instance, a multi-campus, multi-department university or corporation might run sets of servers at each department but keep the central servers for the whole organisation at the main data centre. Or each department might have its own central servers. Or students (or employees) might just add their own regions running on their own desktops and connect them “on demand”. Finally, contrary to what some have claimed in the past, all this can be made secure, using passwords and encryption to ensure that only authorised regions are actually interconnected.

But it’s not just about running OpenSim within a single organisation. Thanks to Hypergrid, you can interlink grids from completely different organisations. You set the level of trust you wish: you can set aside some public regions on your grid and allow everybody to teleport to them; or you can allow incoming teleports only from grids you trust; or you can simply shut the whole world out of your grid. Hypergrid links are actually just URLs, and, as on the Web, if you know the link and the destination accepts users from other grids, you can teleport there. The Web analogy is implemented almost flawlessly.
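The three trust levels described above can be modelled as a single admission check. This is a hypothetical Python sketch, not OpenSim’s actual gatekeeper code or configuration: `GatekeeperConfig`, `may_enter`, and the example grid addresses are invented for illustration, and it assumes that trusted grids may reach any region while everyone else only sees the public ones.

```python
from dataclasses import dataclass, field


@dataclass
class GatekeeperConfig:
    closed: bool = False                              # shut the whole world out
    public_regions: set = field(default_factory=set)  # open to visitors from any grid
    trusted_grids: set = field(default_factory=set)   # grids allowed into any region


def may_enter(cfg: GatekeeperConfig, region: str, visitor_grid: str) -> bool:
    """Decide whether an avatar arriving from another grid may teleport into `region`."""
    if cfg.closed:
        return False
    if visitor_grid in cfg.trusted_grids:
        return True
    return region in cfg.public_regions


cfg = GatekeeperConfig(public_regions={"Welcome"},
                       trusted_grids={"trusted.example:8002"})
print(may_enter(cfg, "Welcome", "stranger.example:8002"))  # True
print(may_enter(cfg, "Lab", "stranger.example:8002"))      # False
print(may_enter(cfg, "Lab", "trusted.example:8002"))       # True
```

The check only concerns visitors from other grids; local residents would never pass through it.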

It has a twist, though, one that the Web, as Tim Berners-Lee designed it, never had. Avatars are not simply “dumb viewers”. They carry their identity with them: a name, their origin (the grid they come from), and, of course, the avatar’s precious inventory. The closest Web equivalent is the ubiquity of identity providers (Facebook logins, for example), where your profile data is “carried” with you when you log in to a third-party site with your Facebook account. The difference is that on the Web there are no standards (OpenID and OAuth are two attempts to standardise the process, but there is no consensus on a single protocol for identity; you just have to deal with a multiplicity of identity providers and support as many as you wish). Also, “identity” on the Web is rather limited in what it stores: mostly just the name, the email address, and sometimes a profile picture get carried from one website to another.

In the metaverse, the avatar and its inventory, plus the 2D profile info, are part of the identity as well, and Hypergrid 1.5 addresses this quite neatly. You really have to experience it to see how fascinating it is. All your inventory comes with you when you log in to another grid, but your items are not immediately available to the other grid, even though you see them in your inventory: they have to be rezzed first. When you do that, the item is tagged with your creator tag (even across grids!) and with a set of permissions you assign to it. Similarly, if you buy content on a foreign grid and teleport back home, it gets stored in a special new system folder called “My Suitcase”. Items there are flagged as content from external grids; they remain on the foreign grid’s central servers and only move to your region if you rez them.
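The behaviour described above, where inventory records travel with the avatar but the asset data only moves when an item is rezzed, can be sketched in a few lines of Python. Everything here is an assumption for illustration: the `Item` record, the “Name @ grid” creator format, the in-memory asset stores, and the example grid addresses are hypothetical, and a real grid would fetch assets from a remote asset server rather than a local dictionary.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Item:
    name: str
    creator: str     # creator tag recording the creator's grid (hypothetical format)
    asset_grid: str  # grid whose asset server actually holds the data
    asset_id: str
    folder: str = "Objects"


def file_purchase(item: Item, home_grid: str) -> Item:
    """File content bought elsewhere under "My Suitcase" so it stays flagged as foreign."""
    if item.asset_grid != home_grid:
        return replace(item, folder="My Suitcase")
    return item


def rez(item: Item, region_grid: str, stores: dict) -> bytes:
    """Copy the asset into the region's grid only when the item is rezzed.

    `stores` maps a grid address to that grid's asset store (asset_id -> bytes).
    Until this call, only the inventory record travels with the avatar.
    """
    data = stores[item.asset_grid][item.asset_id]
    stores[region_grid].setdefault(item.asset_id, data)
    return data


stores = {"home.example:8002": {}, "shop.example:8002": {"a1": b"chair mesh"}}
chair = Item("Chair", "Alice @ shop.example:8002", "shop.example:8002", "a1")
chair = file_purchase(chair, "home.example:8002")
print(chair.folder)                         # My Suitcase
print("a1" in stores["home.example:8002"])  # False: nothing copied yet
rez(chair, "home.example:8002", stores)
print("a1" in stores["home.example:8002"])  # True: copied on rez
```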

Two other layers of absolute coolness have been designed into the system. One, of course, is control over what content is allowed to be transferred. You can configure OpenSim so that no content can move out of your grid. Similarly, you can prevent content from other grids from being brought into yours. While critics might say that this doesn’t cover all possible cases, it goes a long way towards providing minimal protection. Obviously, a grid owner can see a rezzed object and copy it directly from the database, and you can’t prevent that from happening, but that’s exactly the same argument as the impossibility of preventing your avatar, attachments, and rezzed objects from being copybotted on the Second Life Grid. There is simply no way to prevent that.
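The two switches described above (no content out, no foreign content in) combine naturally: a transfer is allowed only when the source grid exports and the destination grid imports. Here is a hypothetical Python sketch with invented names and example grids; it says nothing about how OpenSim actually stores these settings, and, as the text points out, no such check can stop a grid owner from copying what is already in their own database.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TransferPolicy:
    allow_outbound: bool = True  # may content from this grid leave it?
    allow_inbound: bool = True   # may content from other grids be brought in?


def can_transfer(src_grid: str, dst_grid: str, policies: dict) -> bool:
    """Both ends must agree: the source must export and the destination must import.

    Grids missing from `policies` are assumed permissive (an arbitrary choice here).
    """
    if src_grid == dst_grid:
        return True
    src = policies.get(src_grid, TransferPolicy())
    dst = policies.get(dst_grid, TransferPolicy())
    return src.allow_outbound and dst.allow_inbound


policies = {
    "closed-shop.example:8002": TransferPolicy(allow_outbound=False),
    "picky.example:8002": TransferPolicy(allow_inbound=False),
}
print(can_transfer("closed-shop.example:8002", "home.example:8002", policies))  # False
print(can_transfer("home.example:8002", "picky.example:8002", policies))        # False
print(can_transfer("home.example:8002", "other.example:8002", policies))        # True
```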

But at least you have some control. If you do not rez an object on a foreign grid, nobody can forcibly extract that object from your inventory, exactly as on the Second Life Grid. A visible object, of course (just as in SL), can always be copied illegally.

Giving OpenSim users the same kind of content protection they get in Second Life (not more, not less) has made some content creators bold enough to start selling their content on some OpenSim grids. And when I say “selling”, I really mean a working economy, with the possibility of exchanging virtual money for so-called real money, and working across grids, too. All this is not only possible, but has been fully implemented by the Austrian company VirWoX, which runs a money exchange for several grids as well as for Second Life. This means that yes, you can transfer L$ to a few OpenSim grids… or use any of the many payment services (including bank transfers!) to put some money there. The important thing is that even if your “favourite” grid is not among those listed, you can still get “Open Metaverse Currency” dollars, just teleport to a grid which accepts them, shop there, and go back to your “home grid”. The underlying technology has been co-developed with the University of Graz, and dozens more grids are currently beta-testing the concept, so I imagine that the list will grow, as will the number of “competing” exchanges, of course.

Something you won’t see in the video is testing voice or cross-grid profiles. Voice is quite interesting. As some of you might know, Linden Lab uses Vivox as its voice provider, probably because Vivox has a lot of experience providing VoIP for games and virtual worlds. Well, you can buy a licence to use Vivox with OpenSim too. But you have far more options. OpenSim supports open-source VoIP solutions as well, FreeSWITCH currently being the most popular choice, but not the only one. The interesting aspect of voice is that you can interconnect VoIP switches with each other and with the phone network. So, yes, this means that in theory you can talk on the phone with someone in OpenSim, much like the (now closed) AvaLine service once provided by Linden Lab. Depending on the technology used, spatial sound (like in SL) might even be supported as well.

Then there are cross-grid messaging, cross-grid profiles, and a lot of other services that can optionally be provided across interconnected grids. They require special plugins and a backend server to handle them (popular options include SimianGrid, which is a separate project but well integrated into OpenSim). The beauty of the technology is that it’s all Web-based, with neat APIs, which means that any component can call functions on remote servers pretty easily.

So… to recap, here is everything that works in OpenSim these days: intergrid teleportation with cross-grid content transfer, permissions, and creator tags; groups; intergrid profiles and communication; and voice with telephony integration. Not too bad! And it manages all of that while remaining fully compatible with the latest official SL viewers. If you use a tweaked viewer like the ones from realXtend, you can even get meshes; and some minor things, like saving WindLight settings per sim or having prims larger than 10 m, also work nicely. So do sim backups and inventory backups… and so forth.

Caveats

Not everything is bright and shiny, though. The major difference these days is OpenSim’s comparatively low stability. It’s far too easy to crash sims. Handling a lot of avatars in the same place is also not as easy as in SL: performance degrades very quickly. Overall, it looks and feels like SL in 2003, even though OpenSim replicates pretty much everything that SL supports today (active work is being done on supporting HTML-on-a-prim as well), except perhaps the more complex land and group operations, and it adds a lot of extra features.

Then there is the whole issue of configuration. Although you can install the Diva Distro or the OSGrid distro (which will preconfigure your sim to immediately join OSGrid, one of the countless independent grids out there, and one of the oldest and most popular) on Linux, Windows and Mac users have no equivalent solution. Learning to configure OpenSim properly takes a lot of time, and documentation is sparse and mostly incomplete: OpenSim’s eternal “alpha” stage of development means that getting help is hard, and it usually comes via IRC, the official mailing lists, and some independent forums. Some grid operators also offer a degree of technical support. But it’s still hard compared to using any other Internet application server.

And that’s just for a standalone server. Creating your own grid, especially if it’s for more than just a quick test, is overwhelmingly complicated: you not only need good system administration skills, but also a thorough understanding of the actual codebase and the way all its components interact. These days, 13-year-old kids can easily turn their home PCs into a full-fledged Web server (or games server!) just by installing a few packages according to a “recipe”, and the defaults will be good enough to get reasonable performance. It’s only when you need to deal with tens of thousands of daily hits on your Web server that you have to worry about tweaking the system.

With OpenSim, except for quick tests on standalone servers, you have to deal with the architecture from the very first day, unless you don’t care about installing OpenSim at all, only about using it.

But even becoming merely a “user” is not as easy as in Second Life. There are literally thousands of grid operators. Although all of them could theoretically be integrated with each other, in practice not all have Hypergrid 1.5 enabled. A few grids still support Hypergrid 1.0, which has far fewer features and little IP protection (it was a great way to test the concept, though!). Ironically, the larger and more popular the grid, the less likely its operators are to turn on Hypergrid, or even to keep their sims updated to the latest versions. While I might just be mean, I think they do it on purpose, very likely for the same reason that LL has abandoned interoperability: they want to capture their own share of users rather than let them roam freely across grids, since, like LL’s, their revenues come from tier costs and possibly from their internal economy server, and the more registered avatars they have on their grid, the better.

This means that some events happening on one popular OpenSim grid might be available only to the users registered on that grid and be closed to the others. Of course, most grids are open and allow easy creation of avatars — but then it means starting all over again.

Imagine a Web where, in theory, everybody used HTTP as the protocol and HTML for rendering web pages… but in some cases you could only reach a page if you were a specially registered user of that website. That’s pretty much what all those OpenSim grids are like: all are potentially reachable via Hypergrid, but only some actually allow it. It feels strange… until you remember that Facebook, LinkedIn, Plaxo, etc. work similarly: only registered users who are friends with each other get full access to each other’s pages. Visitors finding one of those pages through a Google or Bing search will get only limited access (or no access at all).

Once you’ve logged in, and even teleported across a few grids, another obvious problem comes up: search. OpenSim allows all sorts of hooks to expose content to external search engines (similar to what LL does on the SL Grid, where you can indeed find residents’ profiles on Google, if they have allowed publishing on the Web). But this doesn’t work that well yet. There is no “universal” intergrid search: at best, you can search for groups (which works well with an external module) and for residents on the grid you have registered with. Map search works very well inside the grid you’re currently on. However, you can’t search for, say, a specific sim you remember visiting once when you have no clue which grid it was on.

Content search doesn’t work at all, at least not on a standard OpenSim installation. Many grid operators offer either an in-world search or, just like XStreetSL/SL Marketplace, their own web-based marketplaces. I haven’t tested whether they can actually deliver content across grids; my guess is that you have to be logged in on the grid where the content is hosted, or the delivery will fail (but your money will be gone!). No, there isn’t an “intergrid marketplace” yet, although I imagine someone will soon attempt one. It shouldn’t be too hard: once you’ve designed your marketplace for one grid, and thoroughly tested it to make sure it works well, it should be able to deliver content on any grid where you can place one of those merchant boxes. From a programming perspective, each grid is usually identified by a different tag, so it’s easy to figure out which sim on which grid hosts a given merchant box.
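The speculated intergrid marketplace boils down to a routing decision: find a merchant box for the product on the grid the buyer is currently logged in to, and refuse the sale before charging anything if there is none (which avoids the “money gone, no delivery” failure guessed at above). A minimal Python sketch; `MerchantBox`, `checkout`, and the grid addresses are hypothetical, and `charge`/`deliver` stand in for whatever payment and in-world delivery mechanisms a real marketplace would use.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class MerchantBox:
    grid: str       # grid tag/address hosting the box (hypothetical format)
    region: str
    object_id: str


def pick_box(boxes: dict, product: str, buyer_grid: str) -> Optional[MerchantBox]:
    """Return a merchant box for `product` on the buyer's current grid, if any."""
    for box in boxes.get(product, []):
        if box.grid == buyer_grid:
            return box
    return None


def checkout(boxes: dict, product: str, buyer_grid: str,
             charge: Callable[[], None],
             deliver: Callable[[MerchantBox], None]) -> bool:
    """Check that delivery is possible before taking the buyer's money."""
    box = pick_box(boxes, product, buyer_grid)
    if box is None:
        return False  # no box on this grid: refuse the sale, charge nothing
    charge()
    deliver(box)
    return True


boxes = {"Chair": [MerchantBox("a.example:8002", "Shop", "box-1"),
                   MerchantBox("b.example:9000", "Mall", "box-7")]}
log = []
ok = checkout(boxes, "Chair", "b.example:9000",
              charge=lambda: log.append("charged"),
              deliver=lambda box: log.append(f"delivered from {box.object_id}"))
print(ok, log)  # True ['charged', 'delivered from box-7']
```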

Some things we take for granted actually work differently, and in unexpected ways. For instance, if you IM someone from your grid who is offline, and your grid operator has enabled IM-to-email, they might get the IM by email (sometimes it doesn’t work… don’t ask me why). But if you are online on your grid and your friend is on a different one, this won’t trigger IM-to-email. Giving items is also a bit tricky: you cannot give someone an item unless you are both logged in on the same grid, no matter which grids you originally come from. And I don’t think befriending people works across grids, although, granted, I haven’t tried it often enough. So there is no intergrid presence system yet. However, since most of the underlying communication layers have been moved to Web-based calls, I imagine that cross-grid presence could be implemented in a future release of Hypergrid.

As a side note, one of the projects Philip announced as a priority is fixing LL’s whole presence mechanism. Absurd as it may sound, SL really has just one communication protocol: IM, which is asynchronous and uses UDP messaging. Sending L$ is a specially formatted IM (that’s why you never know whether the money reaches its destination: it’s not an atomic transaction!); so are content transfers and, of course, group chat. When someone comes online, an IM saying “X is online” is sent to every friend who has them on their list (that’s why it’s always a good idea to turn that setting off; it will dramatically improve your SL experience!). Everything is IMs! Until recently, even IMs sent across sims were implemented, well… you guessed it: with IMs between servers. 🙁 (Note: I understand that this might have changed recently.)
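Why an IM-based payment is not atomic can be shown with a toy model: the debit happens locally, while the credit depends on a message that may never arrive. This Python sketch is a hypothetical illustration of the failure mode described above, not Linden Lab’s actual code; the function names are invented, and “atomic” here simply means both balance changes happen in one step on a single ledger (concurrency and crash recovery are ignored).

```python
def pay_by_message(balances: dict, sender: str, receiver: str, amount: int, send) -> None:
    """Fire-and-forget payment, modelled on the IM-based scheme described in the text."""
    balances[sender] -= amount                # the debit happens immediately...
    send({"to": receiver, "amount": amount})  # ...the credit depends on delivery


def on_message(balances: dict, msg: dict) -> None:
    """Apply the credit when (and only if) the payment message arrives."""
    balances[msg["to"]] += msg["amount"]


def pay_atomically(balances: dict, sender: str, receiver: str, amount: int) -> None:
    """Debit and credit applied together on one ledger (single-threaded sketch)."""
    if balances[sender] < amount:
        raise ValueError("insufficient funds")
    balances[sender] -= amount
    balances[receiver] += amount


# Delivered message: the money arrives.
ledger = {"alice": 100, "bob": 0}
inbox = []
pay_by_message(ledger, "alice", "bob", 30, send=inbox.append)
for msg in inbox:
    on_message(ledger, msg)
print(ledger)  # {'alice': 70, 'bob': 30}

# Lost message: 30 units simply vanish.
lossy = {"alice": 100, "bob": 0}
pay_by_message(lossy, "alice", "bob", 30, send=lambda msg: None)
print(lossy)   # {'alice': 70, 'bob': 0}

# Atomic transfer: no message to lose.
safe = {"alice": 100, "bob": 0}
pay_atomically(safe, "alice", "bob", 30)
print(safe)    # {'alice': 70, 'bob': 30}
```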

OpenSim is a bit cleverer. While the client requires IMs, group chat, money, and content transfers to be received as IMs (and sends them to the grid as IMs), the server side is up to the OpenSim developers. What they do is have the sim funnel all those messages to a “Messaging Service” and distribute them from there. At the sim level, you can configure special hooks to send messages elsewhere; one of the first popular modules for OpenSim hooked it up to an IRC server, where sims and groups become channels and personal IMs become private IRC messages. The beauty of that is that IRC, although old, is a very familiar protocol which has long passed the test of time and is known to handle millions of users nicely in a distributed way.
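The funnelling design above amounts to one routing point with a swappable delivery backend. Here is a minimal Python sketch of that idea, using an IRC-style bridge as the backend; `MessagingService`, `IRCBridge`, and the name-mangling rule are hypothetical, not OpenSim’s actual module API, although the `PRIVMSG target :text` line format is standard IRC. Swapping in a different backend would only require another class with a `deliver` method.

```python
from dataclasses import dataclass
from typing import List, Protocol


@dataclass(frozen=True)
class Message:
    kind: str    # "im" for personal messages, "group" for group chat
    sender: str
    target: str  # avatar name for IMs, group name for group chat
    text: str


class Backend(Protocol):
    def deliver(self, msg: Message) -> None: ...


def irc_name(name: str) -> str:
    # IRC nicknames and channel names cannot contain spaces.
    return name.replace(" ", "_")


class IRCBridge:
    """Groups become channels, personal IMs become private messages."""

    def __init__(self) -> None:
        self.outbox: List[str] = []  # raw IRC lines that would be sent to the server

    def deliver(self, msg: Message) -> None:
        if msg.kind == "group":
            target = "#" + irc_name(msg.target)
        else:
            target = irc_name(msg.target)
        self.outbox.append(f"PRIVMSG {target} :<{msg.sender}> {msg.text}")


class MessagingService:
    """Sims hand every message to this service; the backend is pluggable."""

    def __init__(self, backend: Backend) -> None:
        self.backend = backend

    def route(self, msg: Message) -> None:
        self.backend.deliver(msg)


irc = IRCBridge()
service = MessagingService(irc)
service.route(Message("group", "Alice", "Beta Builders", "meeting at 5"))
service.route(Message("im", "Alice", "Bob Smith", "see you there"))
print(irc.outbox)
```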

Nothing requires using an SL-compatible “Messaging Server”, though. You can replace it entirely with something else, say a Jabber/XMPP server, and redistribute presence, messages, money, and content via an XMPP federation. Hook it up to GTalk and, with a plugin, you could in theory send content and L$ from GTalk to an OpenSim grid and vice-versa 🙂 This separation of layers has existed in OpenSim since, oh, 2007, I guess; LL is only now starting to do the same thing for their own grid…

So I imagine it should be easy to replace the current Messaging Server with something that works across grids. After all, they already did that for profiles, too.

Nevertheless, the same caveat applies: while in the future some grid operators might allow their own Messaging Servers to talk to others, some may not. You can see a pattern here… although OpenSim allows a lot of nice extra features, and all recent versions implement them neatly (or at least provide hooks for them), that doesn’t mean that grid operators actually enable them. As more and more people register on an OpenSim grid, possibly lured by the ease of jumping across grids, and start adding friends here and there, buying content on some grids and bringing it to others, they might soon find out that each grid works slightly differently from the others. My typical example is the Animation Overrider: only in OpenSim 0.7.X did I get one version working consistently (I understand some people have simpler variants which have been working for a long time). In theory, I should be able to use my AO on any grid I can teleport to using Hypergrid 1.5, since only OpenSim 0.7 supports it; however, many grids haven’t upgraded to 0.7 yet, or run their own specially tailored version of OpenSim, and the AO might not work on those. In Second Life, a scripted device bought anywhere will work on any sim. In OpenSim, you never know whether things will work on other grids…
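Until grid operators agree on a common baseline, the best a visitor (or a script vendor) can do is guess from a grid’s OpenSim version. This is a hypothetical Python sketch of such a guess; it assumes you can obtain the version string somehow (how a grid exposes it is not covered here), and the 0.7 threshold comes only from the AO anecdote above.

```python
import re


def parse_version(version: str) -> tuple:
    """Turn strings like "0.7.0.2" or "0.7.1-Dev" into comparable integer tuples."""
    first_word = version.split()[0]  # drop trailing notes such as "(custom build)"
    return tuple(int(n) for n in re.findall(r"\d+", first_word))


MIN_FOR_MY_AO = (0, 7)  # from the text: the AO only worked reliably on 0.7.X


def probably_supported(grid_version: str, minimum: tuple = MIN_FOR_MY_AO) -> bool:
    """Heuristic only: tailored forks may report 0.7 and still behave differently."""
    return parse_version(grid_version) >= minimum


for v in ["0.6.9", "0.7.0.2", "0.7.1-Dev", "0.7 (custom build)"]:
    print(v, probably_supported(v))
```

Tuple comparison does the real work here: `(0, 6, 9) < (0, 7)` because the second element decides, which is why the versions are parsed into integers rather than compared as strings.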

In a sense, what we will need in the future is some sort of “OpenSim Consortium”, an organisation of grid operators that establishes guidelines on the minimum set of features to be offered to all OpenSim users, so that people don’t get any nasty surprises about what they can expect to work. To be honest, that won’t happen soon. Many had hoped that such a “consortium” would be led by Linden Lab, IBM, Intel, and the remaining charter members of VWRAP, which is, for all purposes, based on LL’s own ideas on how intergrid teleporting should work, with a lot of encryption code contributed by IBM to establish authentication and authorisation tokens. The problem is, as said, that LL dropped that effort. VWRAP is also insanely complex to implement compared to Hypergrid, although, to be honest, all OpenSim installations are able to use the very first implementation of the protocol, then named “Open Grid Protocol” (OGP), which did allow teleports from LL’s Preview Grid to any OpenSim grid (without any content transfer: not even your avatar would show up, and you couldn’t add items to your inventory). OGP was really just a proof of concept; LL no longer supports it, not even for testing purposes, although Snowglobe still has all the code for it.

Also, it’s important to understand that each grid operator has their own terms of service, and in most cases they bear little resemblance to LL’s. Content bought on a grid with a strong commitment to protecting intellectual property can, in theory, be brought to grids with no such commitment, and if something fails (like the permission system breaking), there is no guarantee that the grid operator will do anything about it, or even care. There is no universal Abuse Report system, and not all grid operators implement their own. Being rude to someone on one grid and getting banned does not mean that the same avatar cannot jump to other grids, or create an account elsewhere and use Hypergrid teleporting to come back to the grid where they had been banned. Even more importantly, not all grids share the same paranoid US fear of contaminating young people’s minds with the power of virtual worlds; ReactionGrid, for instance, allows K-12 kids to mingle freely with adults on the same grid, without any restrictions (except the ones imposed by the sim owners themselves). Puritans will be quite frustrated to learn that non-US grids can allow legitimate casino operators to run in-world gambling legally, and nobody can prevent that (of course, illegitimate casino operators will be punishable under local laws, but it’s highly unlikely that the grid operators will be affected by that: they will just need to take down the illegitimate casino operators to comply with a court order, and go on with their business as usual). Put simply, for better or worse, Linden Lab’s Terms of Service do not apply to any grid except LL’s own. For some this might be a paradise; for others, a nightmare come true…

Conclusions

Every time Linden Lab makes another drastic announcement that outrages a chunk of the resident community, we get a new round of self-styled prophets announcing LL’s (and SL’s) imminent end. This happens so often that Philip even mentioned it in his SLCC keynote speech! The truth is, LL is not going anywhere, SL will probably outlast all those doomsday prophets, and we have nothing to worry about.

Nevertheless, it’s true that changing conditions might push more and more users out of Second Life, not because it “fails” or “ends”, but because their tolerance of LL’s constant blunders and fiascos has run out, and they are ready to move elsewhere. Universities and even businesses, although for different reasons (e.g. costs), might not see any point in remaining in SL any longer. However, all of them enjoy the “Second Life experience” and are not necessarily interested in moving to a completely different virtual world (or in going off to play Facebook games instead). Content creators, for example, might have accumulated a lot of content over the years and patiently acquired a skill set that took them a long time to develop, and they’re reluctant to drop everything and start from scratch in an unfamiliar virtual world where everything is different and nothing can be re-used (not even their reputation!).

OpenSim is the choice for all those people who love Second Life but hate Linden Lab. Of course, it requires some patience with OpenSim, too. Although OpenSim sports many features that Second Life doesn’t have (some of which, like grid interconnection, Linden Lab has completely wiped out of the picture), it’s a far less stable and complete product, subject to many caveats. Even though setting up a sim from a pre-configured distribution might make the initial effort simple enough, actually maintaining an OpenSim grid that supports the same level of social interaction as LL’s own Second Life is extremely hard (if not impossible!): it requires high-end servers at tier 1 co-location facilities, not old PCs in someone’s home, and system administrators with a lot of expertise in OpenSim development to keep everything running smoothly. That combination of skills and hardware is hard to find among grid operators, and only a limited number of grids can actually boast of providing a service good enough for more than casual use.

Ultimately, in 2010 the choice still leans mostly towards SL: no matter how much we blame SL for the constant crashing, the inventory loss, and the utter lack of customer support, it’s far, far superior to the best of all OpenSim grids (OpenSim is still an alpha product, even though it’s impressive). People selling their land in SL and leaving in fury for OpenSim, full of expectations that they’ll get pretty much the same experience, will be immensely disappointed. Not to mention that only a tiny fraction of the content is available on OpenSim… even though it grows and gets better all the time, most of the best and most experienced content creators are still on SL and afraid to open shop on OpenSim.

But on the other side of the coin, if you just wish to join a virtual world that works most of the time, where land can be far cheaper, and which doesn’t subject you to LL’s whims and ToS, and you have the patience to endure glitches and low performance, OpenSim is the perfect choice for you.